Project Description

Publications

Acceptance Rate: 26.2% (33/126)

Talks

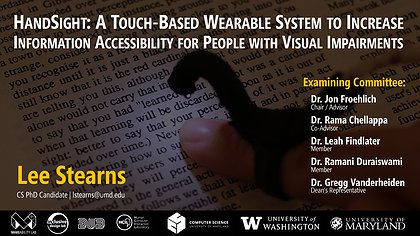

Aug. 1, 2018 | PhD Defense, Computer Science

University of Maryland, College Park

April 6, 2017 | Lecture Series at the Laboratory for Telecommunication Sciences

LTS Auditorium, College Park, MD

PDF | Video | HandSight • MakerWear • Project Sidewalk • GlassEar • Scalable Thermography • BodyVis

Nov. 7, 2016 | Diversity in Computing Summit 2016

College Park, Maryland

Oct. 26, 2016 | ASSETS 2016

Reno, Nevada

PDF | PPTX | SlideShare | HandSight