HandSight

Project Description

HandSight augments the sense of touch to help people with visual impairments more easily access the physical and digital information they encounter in their daily lives. The project is still at an early stage, but the envisioned system will consist of tiny CMOS cameras and micro-haptic actuators mounted on one or more fingers; computer vision and machine learning algorithms that support fingertip-based sensing; and a smartwatch for processing, power, and speech output. Potential use cases include reading a newspaper article or other printed document or exploring its layout, identifying colors and visual textures when getting dressed in the morning, or even performing taps or gestures on the palm or other surfaces to control a mobile phone.

Publications

Investigating Microinteractions for People with Visual Impairments and the Potential Role of On-Body Interaction

Acceptance Rate: 26.2% (33 / 126)

Talks

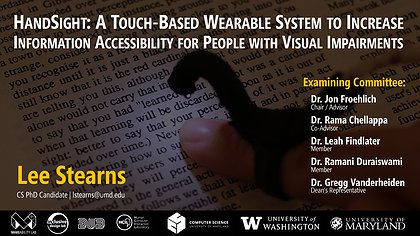

HandSight: A Touch-Based Wearable System to Increase Information Accessibility for People with Visual Impairments

Aug 01, 2018 | PhD Defense, Computer Science

University of Maryland, College Park

Making with a Social Purpose

Apr 06, 2017 | Lecture Series at the Laboratory for Telecommunication Sciences

LTS Auditorium, College Park, Maryland

Interactive Computational Tools for Accessibility

Nov 07, 2016 | Diversity in Computing Summit 2016

College Park, Maryland

Evaluating Haptic and Auditory Guidance to Assist Blind People in Reading Printed Text Using Finger-Mounted Cameras

Oct 26, 2016 | ASSETS 2016

Reno, Nevada